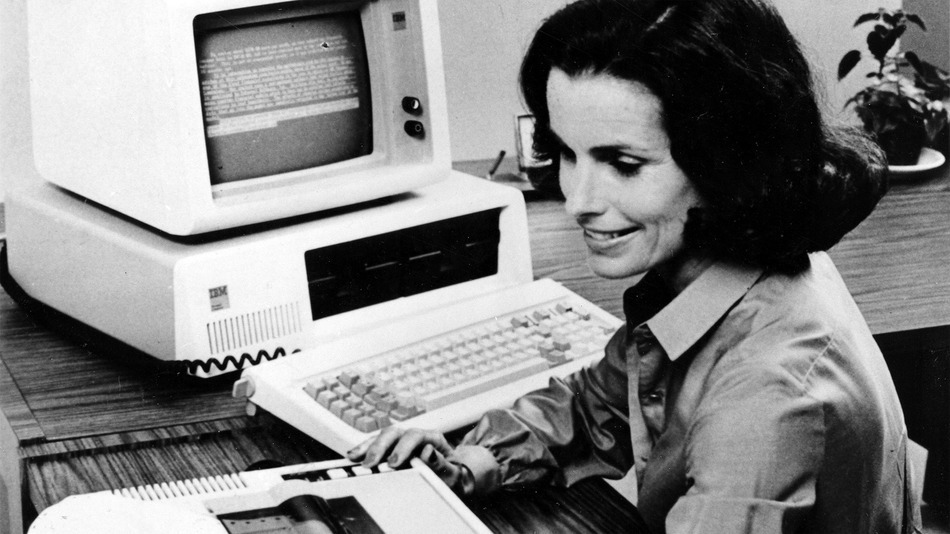

People used to be computers. That is, for hundreds of years, computing was the work of humans, and very often women. Then, in the mid-20th century, machines began to take on the bulk of computing work, and the definition of "computer" changed.

A computer was no longer just a human reckoner, in the same way that a calculator was no longer a person who, as Webster's 1828 dictionary had defined it, "estimates or considers the force and effect of causes, with a view to form a correct estimate of the effects." In 70 or so years, a computer went from being a room-sized monstrosity that ran on pulleys and bulbs to a four-ounce touchscreen that's as ordinary as it is miraculous.

Now, leading computer scientists and technologists say the definition of "computer" is again changing. The topic came up repeatedly at a brain-inspired computing panel I moderated at the U.S. Capitol last week. The panelists — neuroscientists, computer scientists, engineers, and academics — agreed: We have reached a profound moment of convergence and acceleration in computing technology, one that will reverberate in the way we talk about computers, and specifically with regard to the word "computer," from now on.

"It's like the move from simple adding machines to automated computing," said James Brase, the deputy associate director for data science at Lawrence Livermore National Laboratory. "Because we're making an architectural change, not just a technology change. The new kinds of capabilities — it won't be a linear scale — this will be a major leap."

The architectural change he's talking about has to do with efforts to build a computer that can act — and, crucially, learn — the way a human brain does. Which means focusing on capabilities like pattern recognition and juiced-up processing power — building machines that can perceive their surroundings by using sensors, as well as distill meaning from deep oceans of data. "We are at a time where what a computer means will be redefined. These words change. A 'computer,' to my grandchildren, will be definitely different," said Vijay Narayanan, a professor of computer science at Pennsylvania State University.

"There's a way in which the whole process of science and technology will change," said Peter Littlewood, the director of Argonne National Laboratory. "There is not going to be a single model for computing. We've had the linear, the serial one. We're developing the neuromorphic methods that are designed around pattern recognition... There will be a change, not just architecturally, but also in the way we integrate data that we are not doing now."

The dramatic change in computing that Littlewood describes has to do with modeling next-generation machines on the networked mechanisms of the human brain — an approach that, as the M.I.T. neuroscience professor James DiCarlo cautioned, has some major limitations. The first of which is that we don't actually know, in anywhere near a complete sense, how the brain works. Which means we can't yet build a machine model of the human brain. "The brain is a computer we don't yet know how to build," DiCarlo said. "It's an information processing device we don't yet know how to build. What type of device is it? That is the question. We're not ready to build machines that can perfectly emulate the brain because we don't know how it works."

And yet scientists know enough about the brain — that it relies on a tangle of billions of interconnected neurons, that it is energy efficient, that its data processing sophistication is in many ways unmatched by technology — to know that talking about the brain as a computer is more than just a useful metaphor. The fields of neuroscience and computer science are already feeding off one another.

"The challenges of mapping the brain are comparable to the challenges of mapping the universe," Littlewood said. "We're now on the verge of being able to map the brain at a scale where you can see a synapse. And if you map the brain down to the scale of synapse, and you take all of that data, that's about a zettabyte of data. A zettabyte is about the annual information traffic over the worldwide Internet. It is a very big number. It is not an astronomical number — but it is a number that in the next decade, we will need to be able to deal with."

As the computers of the near future help humans adapt to larger piles of data, they'll also learn by observing human behavior. "So far, we have learned to adapt to computers," said Dharmendra Modha, the founder of IBM's Cognitive Computing group. "We use keyboards. We use our thumb to type into the smartphone. But given the advent of the brain-inspired computing and how it's going to integrate into modern computing infrastructure, computers will begin to adapt more and more to human beings."

That's a key point and one that contains a pleasant irony: As the definition of "computer" changes, it may soon evoke the older, more human definition of computing. Because while the computers of the future will still be machines, they'll be more human-like than ever before.

"That's our goal: To make a computer much more like a human being, in the sense that it integrates data and can make decisions," Littlewood told me. "So the future definition of computer may be like the original. It may be like a person after all."

Source: mashable.com